This essay is part of a larger series based on talks I've given over the years. You can read it on its own, or start here for full context.

Why focus on ambient computing, vs the many other things to which you could devote your short time on this earth?

That's an excellent question, worthy of a thoughtful answer. So often, I think, the answer is assumed: we'll have better lives and be better humans, and that's certainly true. However, I have a very practical answer: I want humans, and hopefully other earth species, to become galactic citizens.

You may disagree with me that this is the most important goal, and that's fine. I believe this goal serves many other goals near and dear to us on earth that you may value more. In order to truly comprehend and traverse the universe, and any life forms we meet there, we will have to start very small: not die, not destroy the earth, and evolve past our inherent xenophobia so we can make friends. And frankly, we'll just have to learn a lot more about the actual universe, not just the earth-centric bubble we are beginning to understand now.

All of these are simple goals to write out, but they require humans to think longer-term than our current stage of evolution requires of us.

How do we succeed? Well, as we know from the explosion of technology and human life improvements (and sometimes major fuckups) over the last five hundred years, knowledge transference is key. We can trace these knowledge jumps to different sources: increased literacy, access to new information, new peoples, new lands, or an adjacent possible achieved simultaneously by different folks around the same time.

Regardless of the source of the jump, when it comes to dissemination, the more shared language and literacy we have, the faster we go. We started with the spoken word, then moved on to written, then printed, then mass literacy. These shifts correspond with eras: the Kerala School, the Tang Dynasty, the Renaissance. Today we have the technology era and, on a wider scale, the Anthropocene.

I will not try to convince you of the efficacy of shared knowledge with examples; they are too numerous to mention. The fact that a majority of humans on the planet will have access to this document, and be able to read at least a rough translation of it in the language or mode of their choosing, is example enough.

If we believe that knowledge transference is key to great leaps in technology, well, computers are great for knowledge transference:

- Electromagnetic radiation beamed into our eyeballs: We have the ability to beam pure energy into our eyeballs in any form we choose, in various dimensions. Everything from movies to email to digital objects rendered to look as real as our environments.

- Ambient sound indicators: Although we're talking about computers here, sound literacy extends beyond digital devices to many human-made technologies. A thousand or ten thousand years ago you had mostly natural noises, and maybe a few human designs, like church bells or war horns. Now humans are literate in the meaning behind many more noises: ambulances, text notifications, the Tetris song. Better knowledge transference, again.

- Ultra-efficient physical inputs: From swipes to keyboards to voice input, we are more efficient than any humans in history when we use a computer.

- Networked: Almost all computing devices made today are designed to be able to transfer data to each other, down to simple electric plugs.

However, as we know, computers are a double-edged sword: humans may now have access to information they can't make sense of, because literacy itself is a technology that is unequally distributed. Just because you can read a news article doesn't mean you are equipped to understand who wrote it, and why: understanding the overall context requires a literacy of its own.

This is why I care about making computers more accessible to humans.

So what's the holdup? Why aren't we well on our way to galactic citizenship, now that computers are ubiquitous? Well, there are many reasons, but here are a few.

First, not everyone is computer literate, much less computer fluent. Even if someone uses a computer every day, they might not be able to use it to the maximum of their intelligence, ability, and imagination.

Bad actors are another problem, especially in our current era of computer literacy. Unfortunately, not only is this not my area of expertise, but I don't think most tech leaders out there today are good at it either, except perhaps those in security. Tech folks tend towards optimism, inspired by the good we think technology can provide. We do not naturally think through the potential misuses of the tech. I would go so far as to say that I personally am incapable of doing so quickly and effectively without serious coaching. But the evidence is clear: bad actors exist, technology will always be misused, and the best course of action is to accept that reality and hire the right people, who understand the potential risks and can help build the right defenses against that misuse.

Finally, there are computers themselves. We had dual goals for them. We named them computers: devices to help us compute. But what they truly are is brain-dump machines for our knowledge: computers now hold everything from your last text to drunken party photos to your credit card information to every frame of Wall-E.

But humans are adaptable, and because computers are our mechanical doppelgangers, they are adaptable too. We dream of how we want the world to be when we have this kind of computing power and can do more with it. This is why I love the digital realm, as it were.

Maybe this isn't specific enough. Let's try again.

And the closer we tie human perception and computer perception, the easier it is to express what we know to other humans through this human-friendly conduit.

But this isn’t just internet-level stuff. This is the stuff of dreams, of visceral imaginings. It’s the reason that when you fly in dreams and when you fly in VR, both feel good and natural somehow. This is a base-level, animal-brain shared understanding that is impossible to express via other mediums.

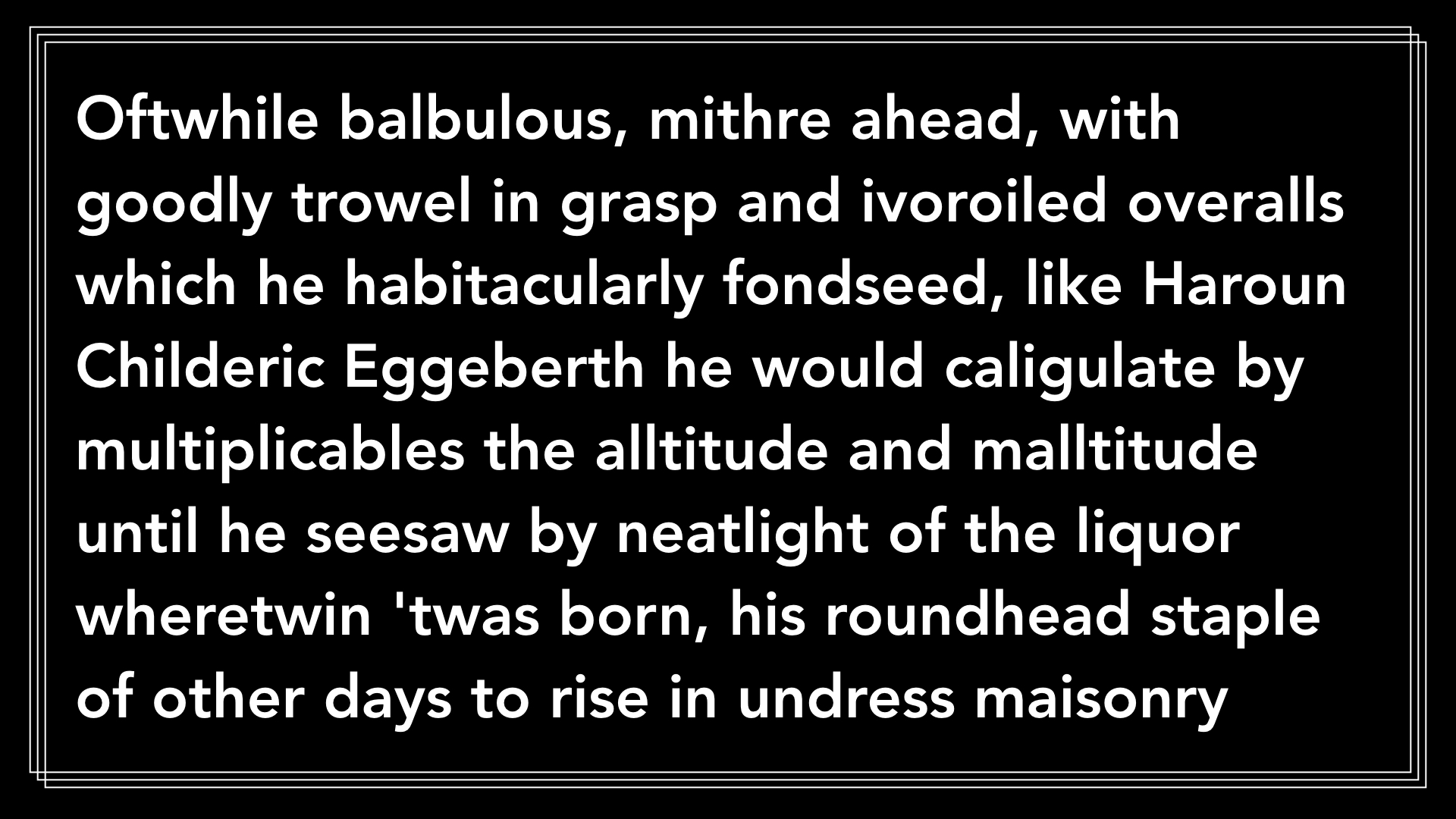

Back in 1939, James Joyce published Finnegans Wake, a book he wrote specifically to try to express communication in this low-level limbic part of the brain, the somewhat conscious mind. He called the prose style ‘the language of the night’. It looked like this.

As you can see, if you read English, it's not clear enough to read (that is, it isn't legible), though if you try to read it, it is comprehensible. But it's tiresome to muddle through, and that was the opposite of Joyce's intent; he meant it to be inherently intuitive, much more so than conventional language. Alas. His patron Harriet Shaw Weaver, his friend Ezra Pound, and even H. G. Wells, for god's sake, all knocked him for writing Finnegans Wake.

But I’ll give him credit, because he was trying to take what he knew of language as a shared base experience and push it into something even more low-level in the brain: more animal, bypassing higher-level thought. That is exactly what user experience and environment designers, architects, industrial designers, and game makers do every single day.

What's the difference? Why do they succeed where he failed? There are a few reasons. All of the above designers work with space, motion, and physicality as well as words. Written language is too high-level, too abstract; it requires prefrontal processing. You can design a subconscious experience with visuals and sound much more easily. This is part of why the rise of animation in the 20th century was, in its way, as important as the rise in literacy: it led to a new form of literacy and shared understanding.

For example, Dumbo came out in 1941, just after Fantasia, and had some genuinely unique, almost disturbing imagery in the Pink Elephants sequence. Here's an example: someone said, "I'm going to draw a marching elephant made of elephant heads," and then they drew it, and animated it, and for better or worse, now we all get what that meant to them, much more so than what James Joyce was trying to write.

I don't like it, but here we are, with a shared understanding of what that animator meant. Thanks, technology.

Obviously, we have been able to express much, much more using computers. And here's the best part: not only can we express our ideas with computers, but we can be sure others understand them in the way we meant, with higher fidelity. This is not possible with the written word.

This is exciting, because it means humans have moved beyond words, and even images, to animated literacy: the ability to recall things that are expressed over time with high visual fidelity. We have always been able to do this in theory, but have only recently been able to take full advantage of it with complex concepts.

The better and faster we can talk to each other and get the point across, and the more humans who can expertly use computers because they are well-designed, familiar, and accessible, the sooner we become galactic citizens. And we are getting there: computers are getting closer and closer to becoming true partners in knowledge, not just simple calculation machines.

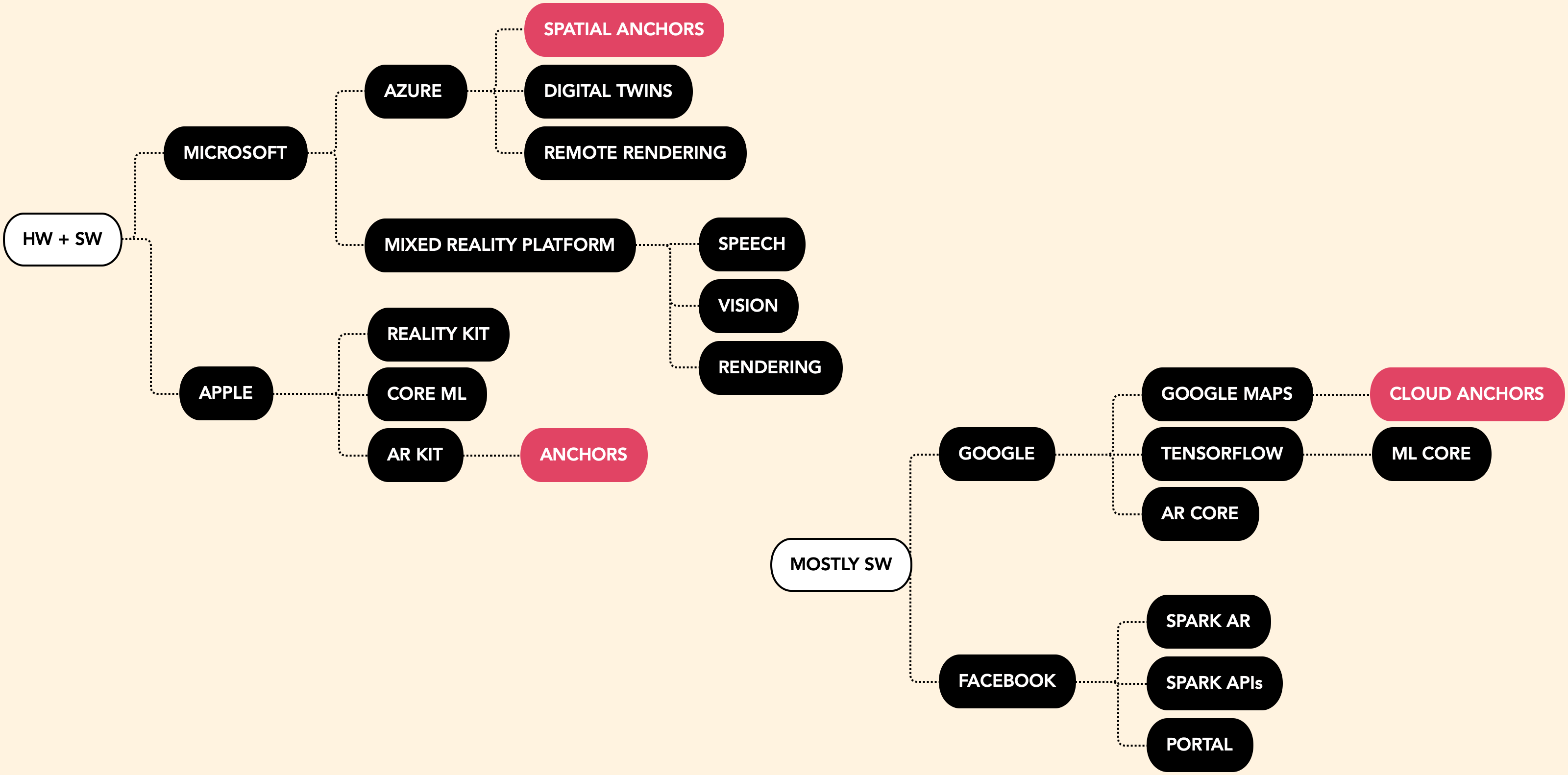

I learned of a concept called the ‘half-life of technology intimacy’ from the Lux Capital folks, and I like it a lot. Computers started off in other buildings, then moved to one's desk, then to a pocket, and now to a wrist or face, but they are not necessarily proportionally better. I’ve got a house full of computers, as you can see from this essay, and they are all around me. But what I want them to do is clearly not what they do, or I wouldn’t have to scribble drawings on photos.

There is clearly a gap. I interact with computers more than I interact with any human, but the operating systems and applications I use are too general purpose, too generic, for me to use as intimately as I could to really be able to transmit my thoughts to them quickly, precisely, and effectively.

Now I know a large majority of folks who may read this essay understand, love and use computers and professional software all the time. But I also know you’re constantly making pipeline tools, extensions, custom builds, because what you have is not quite good enough, doesn’t fit the person, doesn’t fit the use case, doesn’t fit the game or movie or app you’re trying to make.

Imagine how much more uncomfortable that is when we think about this half-life of intimacy. Computers are getting closer and closer, more ubiquitous and ephemeral than ever. We can’t just keep making custom pipelines. We have to think about this systemically so that everyone can have a comfortable, custom computer. We have to…human computers.

All software, from Siri to your spatial OS, needs this level of basic human knowledge and context so that we can interact with it in useful ways. I think we have an artificial categorization right now: that only things that look human will need to act human, by which I mean being kind, being open to feedback and able to react to it, being patient, and understanding where we’re at and what we want.

But the reality is we’re interacting with computers now as much as or more than with other humans. All of these things need to have context around what we’re doing, so that we can get knowledge out of our heads and into the shared pool, with shared context. Otherwise we just end up with frustrated or confused users, and computers stay as obtuse, annoying, and hard to use as they seem to most of the world today. The closer we get to computers, the more human (the more context-aware, the more forgiving) they will have to be.

With ubiquitous computing, computers are increasingly intimate with us. This means they’re increasingly annoying, too; just think of your average smart assistant. If we don’t get this right, people will never feel that they can use a computer to the full extent of their abilities. And that is why I care.